Hi Multis,

Anand here, reporting on Nvidia (NVDA).

With Q1 FY2026 earnings out on May 28, the company posted another blowout quarter, sending the stock up more than 5%.

While Apple and Nvidia continue to trade places for the title of world’s most valuable company, I believe 2025 will be the year Nvidia takes the lead — and keeps it.

First, I'll dive into the earnings. Then Kris will take over with a valuation of the stock to see whether it’s still a buy.

The Numbers

Nvidia reported total revenue of $44.1 billion, up 69% year-over-year. Has the world's biggest company ever grown its revenue by 69% before?

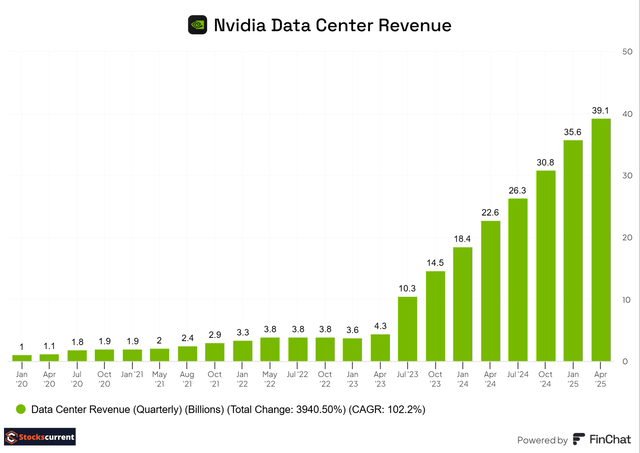

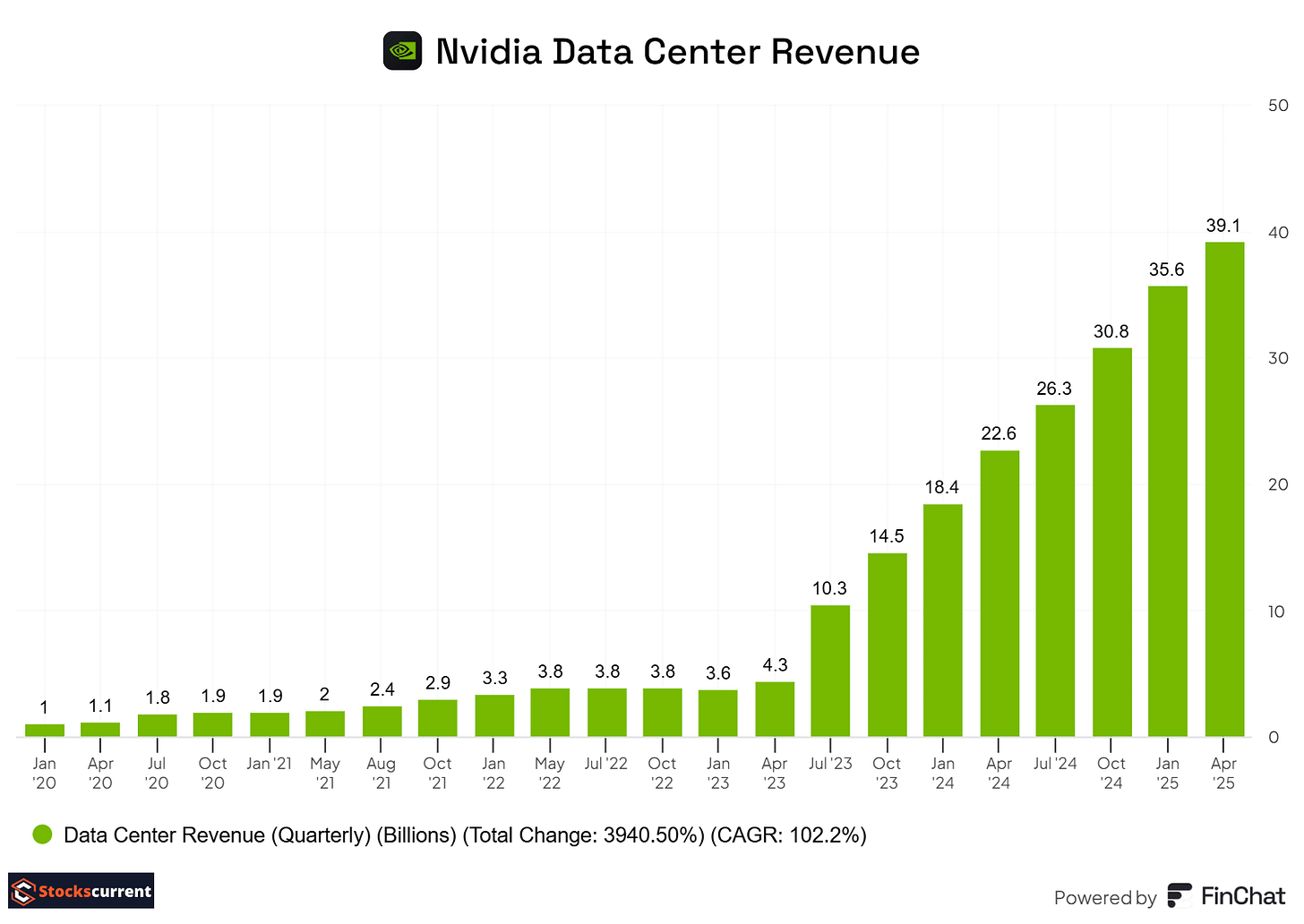

Data Center revenue was $39.1 billion, up 73% year-over-year, driven by AI factory build-outs and Blackwell deployments. Blackwell contributed nearly 70% of the Data Center's compute revenue in the quarter, with the transition from Hopper nearly complete. Blackwell is in full swing. Despite the challenging environment with tariffs and stringent export controls, Nvidia has delivered outstanding revenue growth.

Source: Finchat (if you follow the link, you can get a 15% discount, only $245 for a year for the Plus subscription, or $653 per year for the Pro)

On April 9, 2025, NVIDIA was informed by the US government that a license is required for the export of its H20 products to the Chinese market. H20 has been in the market for more than a year, and the new export rules don't provide any grace period to sell through the inventory.

In Q1, Nvidia recognized $4.6 billion in H20 revenue, all of it shipped before April 9, and couldn't ship another $2.5 billion worth of orders. So out of roughly $7 billion in Q1 H20 demand, $2.5 billion went down the drain. The company also had to take a $4.5 billion charge for H20 inventory and purchase obligations. Nvidia gave a heads-up that it expects to lose another $8 billion in Q2 from H20 orders it cannot fulfill due to the export controls imposed by the US government. That adds up to a total impact of $15 billion ($2.5 billion + $4.5 billion + $8 billion) from export controls. Painful.
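For readers who want to retrace the math, here is a minimal sketch of how the $15 billion figure can be pieced together from the numbers in the paragraph above. The variable names are mine, and the grouping (unshipped Q1 orders, plus the Q1 charge, plus the Q2 orders that cannot be fulfilled) is my reading of the figures rather than an official breakdown.

```python
# All figures in billions of USD, taken from the paragraph above.
h20_recognized_q1 = 4.6    # H20 revenue booked before April 9
h20_unshipped_q1 = 2.5     # Q1 orders that could not ship
inventory_charge_q1 = 4.5  # charge for inventory and purchase obligations
h20_unfulfilled_q2 = 8.0   # Q2 H20 orders that cannot be fulfilled

# Q1 H20 demand = what shipped plus what could not ship.
q1_h20_demand = h20_recognized_q1 + h20_unshipped_q1

# Total impact = everything that was lost or charged off (assumed grouping).
total_impact = h20_unshipped_q1 + inventory_charge_q1 + h20_unfulfilled_q2

print(f"Q1 H20 demand: ${q1_h20_demand:.1f}B")
print(f"Total export-control impact: ${total_impact:.1f}B")
```

Note that the $4.6 billion that did ship is part of Q1 demand but not part of the damage.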

But despite this strong headwind, the company posted 69% revenue growth in Q1 and expects $45 billion in revenue in Q2. That just demonstrates the incredible demand.

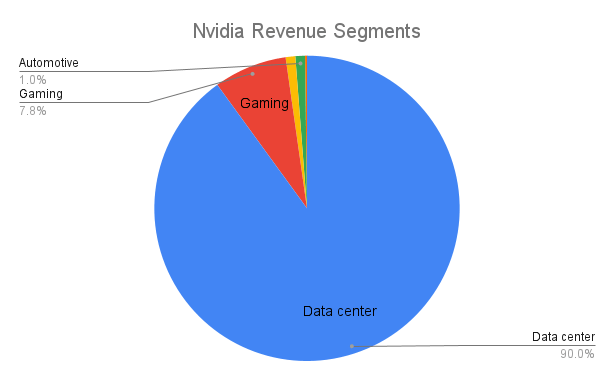

Nvidia segments its revenue into five categories: Data Center, Gaming, Professional Visualization, Automotive, and OEM and Other.

Gaming revenue was a record $3.8 billion, increasing 48% sequentially and 42% year-on-year, driven by strong adoption by gamers and creators.

The company is experiencing robust demand (an understatement!) and improved supply. AI is transforming the PC for both creators and gamers. Nvidia has the largest footprint for PC developers with a GeForce installed base of 100 million users.

Nvidia also started shipping AI PC laptops, including models capable of running Microsoft's Copilot+. In Q1 2025, the company brought Blackwell architecture to mainstream gaming with the launch of GeForce RTX 5060 and 5060 Ti, starting at just $299. The RTX 5060 also debuted in laptops, starting at $1,099.

Another reason for the jump in gaming revenue is the recently unveiled Nintendo Switch 2, which leverages NVIDIA's neural rendering and AI technologies, including custom RTX GPUs. Nintendo has shipped over 150 million Switch consoles to date, making it one of the most successful gaming systems in history.

Revenue from professional visualization was $509 million, flat sequentially, and up 19% year-on-year. Tariff-related uncertainty temporarily impacted Q1 systems. Management expects sequential revenue growth to resume in Q2.

Revenue from the Automotive segment was $567 million, down 1% sequentially but up 72% year-on-year. Year-on-year growth was driven by the ramp-up of self-driving across a number of customers and robust end-demand for EVs. Nvidia is partnering with General Motors to build next-gen vehicle factories and robots using NVIDIA AI, simulation, and accelerated computing.

NVIDIA reported GAAP gross margins of 60.5% and non-GAAP margins of 61%. But that includes the one-time $4.5 billion charge. Excluding that, Q1 non-GAAP gross margins would have been 71.3%, which beats the company’s own guidance. So if you're seeing headlines like “NVIDIA’s margins are falling,” don’t be misled. The dip is tied to U.S. export restrictions, not operational weakness. Expect a similar margin impact next quarter for the same reason.
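Here is a rough back-of-the-envelope check of that adjusted margin. It assumes the entire $4.5 billion charge landed in cost of revenue (my assumption for illustration) and uses the rounded figures from this article, so it lands within about a tenth of a point of the 71.3% quoted above rather than matching it exactly.

```python
# Figures in billions of USD, rounded as quoted in the article.
revenue = 44.1           # Q1 total revenue
reported_margin = 0.61   # non-GAAP gross margin, including the charge
h20_charge = 4.5         # one-time H20 charge (assumed to sit in cost of revenue)

# Add the charge back to gross profit to see the margin without it.
gross_profit = revenue * reported_margin
adjusted_margin = (gross_profit + h20_charge) / revenue

print(f"Reported margin: {reported_margin:.1%}")
print(f"Margin excluding the charge: {adjusted_margin:.1%}")
```

The charge alone is worth roughly ten points of gross margin, which is why the headline number looks so much weaker than the underlying business.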

NVIDIA posted net income of $18.8 billion for the quarter, up 26% year over year. That translates to $0.76 in GAAP earnings per diluted share, a 27% increase from last year. On a non-GAAP basis, EPS came in at $0.81. The strong bottom-line growth demonstrates again just how profitable NVIDIA’s business model has become.

Back in 2022, analysts expected NVIDIA to earn just $0.65 per share for the entire fiscal year 2025 under the base-case scenario. Fast forward to today, and NVIDIA just delivered $0.76 in GAAP EPS in Q1 alone.

The company is now guiding for full-year earnings between $3.20 and $3.25, blowing past even the most optimistic forecasts. That kind of acceleration is staggering. It’s a clear signal of how fast NVIDIA’s business is scaling (and how wrong the early models were).

For Q1 2025, the company reported a record free cash flow of $26.2 billion, or roughly 59% of total revenue ($26.2 billion out of $44.1 billion).

Source: Finchat
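As a quick sanity check, here is a small sketch that turns the quarter's headline figures into margins. It uses only the rounded numbers quoted in this article (revenue, net income, and free cash flow), and the variable names are mine.

```python
# Figures in billions of USD, as quoted in the article.
revenue = 44.1
net_income = 18.8
free_cash_flow = 26.2

# A margin is simply the figure divided by revenue.
net_margin = net_income / revenue
fcf_margin = free_cash_flow / revenue

print(f"Net margin: {net_margin:.1%}")
print(f"Free cash flow margin: {fcf_margin:.1%}")
```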

Guidance

Management guided next quarter’s revenue to be $45 billion, which factors in an $8 billion hit from lost H20 revenue due to U.S. export controls.

Gross margins are expected to land between 71.8% and 72%, in line with expectations. Operating expenses are projected at $5.7 billion on a GAAP basis and $4 billion on a non-GAAP basis.

Notably, management did not provide full-year revenue guidance this quarter.

Insights from the call

Impact on China business

NVIDIA has identified a $15 billion revenue impact tied to U.S. export restrictions on its China business. Management had previously estimated a $50 billion long-term opportunity in the Chinese market, so this is a meaningful hit. While China is not NVIDIA’s primary revenue driver, it still plays a significant role in the bottom line.

The important context here is that this disruption is geopolitical, not operational. Despite the headwinds, NVIDIA has continued to grow both revenue and EPS far beyond expectations, underscoring the strength and resilience of its core business.

Nvidia CEO Jensen Huang on the call:

China is one of the world's largest AI markets and a springboard to global success. With half of the world's AI researchers based there, the platform that wins China is positioned to lead globally.

We cannot reduce Hopper further to comply. We are exploring limited ways to compete, but Hopper is no longer an option.

China's AI moves on with or without US chips. It has the compute to train and deploy advanced models.

The question is not whether China will have AI; it already does. The question is whether one of the world's largest AI markets will run on American platforms. Shielding Chinese chip makers from US competition only strengthens them abroad and weakens America's position. Export restrictions have spurred China's innovation and scale. The AI race is not just about chips. It's about which stack the world runs on.

Most NVIDIA investors aren’t overly concerned about the $15 billion hit tied to China.

The bigger issue is strategic. By cutting off Chinese chipmakers from U.S. technology, the export controls may actually accelerate China’s domestic innovation and global competitiveness. It’s a double-edged sword.

Jensen Huang has been candid about this risk, acknowledging that while NVIDIA is a clear winner today, the long-term geopolitical landscape is unpredictable. China may be behind for now, but it’s rapidly moving to reduce its reliance on the U.S. tech stack and could eventually export its own AI solutions to allied nations. The direction of this risk will only become clear over time. For now, NVIDIA continues to execute and expand globally with remarkable precision.

Blackwell and NVLink are selling like hotcakes:

Blackwell is not just ramping, it's ramping faster than any product in NVIDIA’s history. It drove a massive 73% year-over-year jump in Data Center revenue and already accounts for nearly 70% of the segment’s compute revenue this quarter. Major hyperscalers are each deploying around 1,000 NVL72 systems per week, equivalent to 72,000 Blackwell GPUs, and this pace is still accelerating.

The takeaway? Demand for Blackwell is not just strong, it’s explosive and it’s setting the tone for NVIDIA’s next phase of growth.
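To put the NVL72 deployment pace in perspective, here is a minimal sketch of the arithmetic. The weekly rate and the 72 GPUs per rack come from the text above. The 13-week quarter at a flat rate is my own simplifying assumption, and since the pace is still accelerating, treat the quarterly number as illustrative only.

```python
GPUS_PER_NVL72 = 72      # each NVL72 rack holds 72 Blackwell GPUs
racks_per_week = 1_000   # approximate weekly rate per major hyperscaler
weeks_per_quarter = 13   # assumption: flat rate over a 13-week quarter

gpus_per_week = racks_per_week * GPUS_PER_NVL72
gpus_per_quarter = gpus_per_week * weeks_per_quarter

print(f"{gpus_per_week:,} GPUs per week per hyperscaler")
print(f"{gpus_per_quarter:,} GPUs per quarter at a flat rate")
```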

The GB200 NVL represents a major architectural leap, purpose-built for data center-scale AI workloads.

At its core is NVIDIA’s fifth-generation NVLink, a high-speed interconnect that delivers 14 times the bandwidth of PCIe Gen 5. Each NVL72 rack is a beast, packing 1.2 million components, weighing nearly 2 tons, and capable of moving 130 terabytes per second, roughly the same as peak global Internet traffic.

This is not only a powerful system, but it's also incredibly complex to manufacture, configure, and deploy. Despite that, NVLink is already proving to be a new growth engine. In Q1 alone, shipments topped $1 billion. At COMPUTEX, NVIDIA took it a step further by unveiling NVLink Fusion, allowing hyperscalers to build semi-custom GPUs and accelerators that plug directly into the NVIDIA platform. This opens the door to even deeper integration and performance at scale.

The management team provided updates on Blackwell Ultra, also known as GB300. Sampling of GB300 systems began earlier this month at the major CSPs, and the company expects production shipments to commence in the second half of 2025. GB300 will utilize the same architecture, physical footprint, and electrical and mechanical specifications as GB200. They can also leverage the learnings from Hopper to facilitate a smooth transition to Blackwell.

NVIDIA has committed to an annual GPU release cadence through 2028, a pace that will be tough for competitors to match. It’s reminiscent of the early iPhone years, but with even greater complexity. Building and deploying a cutting-edge GPU is at least 10 times harder than shipping a smartphone; yet, NVIDIA is confident it can maintain this pace.

That kind of consistency is not just about engineering talent, it’s a testament to how process innovation and execution excellence are deeply embedded in the company’s DNA.

Sharp demand for inference

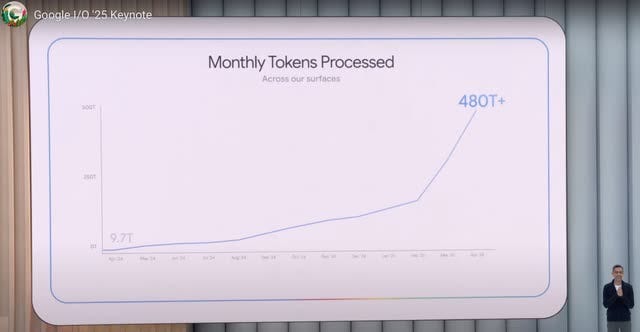

NVIDIA is seeing a sharp surge in inference demand, driven by explosive growth in token generation across major AI platforms.

OpenAI, Microsoft, and Google are all reporting significant jumps, with Alphabet noting it now processes 480 trillion tokens per month, up from just 9.7 trillion a year ago. That's a staggering 50x increase.
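Here is a small sketch of what that jump implies. The two token counts come from the paragraph above. The monthly compounding rate is my own derived illustration, assuming smooth growth over twelve months, which real usage almost certainly did not follow.

```python
# Alphabet's monthly token volume, in trillions, as quoted above.
tokens_year_ago = 9.7
tokens_now = 480.0
months = 12  # assumption: the two readings are twelve months apart

# Overall growth multiple over the year.
growth_multiple = tokens_now / tokens_year_ago

# Constant month-over-month rate that would produce the same multiple.
monthly_rate = growth_multiple ** (1 / months) - 1

print(f"Growth multiple: {growth_multiple:.1f}x")
print(f"Implied monthly growth: {monthly_rate:.1%}")
```

In other words, the "50x" figure is a slight round-up, and it corresponds to token volume growing by more than a third every single month.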

Startups serving inference workloads on Blackwell are also scaling rapidly, tripling both their token throughput and revenue from high-value reasoning models. This step-function leap in inference activity is a powerful tailwind for NVIDIA, reinforcing its central role in powering the next wave of AI deployment.

Source: Google I/O 2025

CFO Colette Kress mentioned that Microsoft processed over 100 trillion tokens in Q1, a fivefold increase on a year-over-year basis.

Reasoning is one of the most compute-intensive operations in AI, and the scaling laws continue to apply, not just for training, but increasingly for inference.

With each new generation of large reasoning models, the unit cost of inference drops, but usage grows exponentially.

This dynamic is fueling a powerful economic flywheel: lower per-unit inference costs lead to broader adoption, which in turn drives up total compute and infrastructure demand.

Even as inference becomes cheaper, total spending rises, reinforcing the need for large-scale, GPU-intensive infrastructure. For now, AI remains a capital-heavy game, and NVIDIA is at the center of it.
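The flywheel is easier to see with numbers, so here is a minimal sketch. Every input is hypothetical and chosen purely for illustration: I assume the unit cost halves with each model generation while usage quadruples. None of these rates come from NVIDIA or from the earnings call.

```python
def total_spend_by_generation(unit_cost, usage, cost_decline, usage_growth, generations):
    """Return total inference spend for each generation.

    unit_cost:    cost per million tokens in the first generation
    usage:        millions of tokens consumed in the first generation
    cost_decline: multiplier applied to unit cost each generation (0.5 = halves)
    usage_growth: multiplier applied to usage each generation (4.0 = quadruples)
    """
    spend = []
    for _ in range(generations):
        spend.append(unit_cost * usage)
        unit_cost *= cost_decline
        usage *= usage_growth
    return spend


# Hypothetical inputs: $10 per million tokens, 1,000 million tokens to start.
spend = total_spend_by_generation(10.0, 1_000.0, 0.5, 4.0, 4)
for generation, dollars in enumerate(spend):
    print(f"Generation {generation}: total spend ${dollars:,.0f}")
```

As long as usage grows faster than unit cost falls, total spend keeps climbing. If the two rates ever matched, spending would flatten, which is the scenario bears on AI CapEx are betting on.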

Pipeline projects that are backing Nvidia's moat

Many investors are still trying to wrap their heads around NVIDIA’s staggering numbers and decide what is substance and what is hype.

But one thing is clear: the Data Center segment is the undisputed growth engine. While other business units contribute, it's the AI factory buildout that’s driving the narrative.

Nearly 100 NVIDIA-powered AI factories were in motion during Q1 2025, signaling an acceleration in both scale and deployment speed.

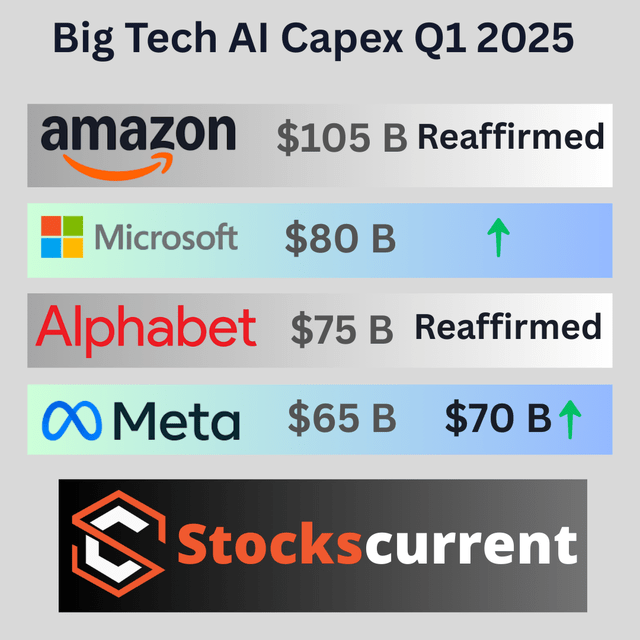

Despite concerns after the DeepSeek moment, hyperscalers haven’t pulled back. In fact, their data center CapEx is still rising, underscoring the urgency and conviction behind AI infrastructure investments.

Source: Author

GPUs are selling like hotcakes, powered by hyperscaler CapEx and mega-projects like OpenAI’s Stargate and xAI’s Colossus. The AI factory race is moving faster than anyone expected.

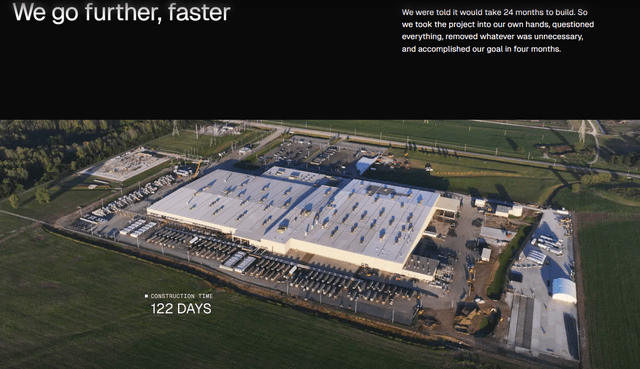

Case in point: xAI turned a gutted facility in Memphis into a fully operational data center in just 122 days. Now, they’re going even bigger, building a 1-million GPU supercomputer cluster near Memphis, separate from their existing 200,000 GPU setup. That build is expected to wrap in just 6 to 9 months. The pace and scale of deployment are staggering, and NVIDIA is at the center of it all.

Source: x.ai

Stargate has already started building the AI factory in Abilene, Texas. For the updates on the Stargate project, please watch the video here.

Another recent development is that Nvidia led the announcement of a 500-megawatt AI infrastructure project in Saudi Arabia and a 5-gigawatt AI campus in the U.A.E.

In June, CEO Jensen Huang is going on tour in Europe, and there will be many more AI factory announcements coming from that trip.

These are not speculative ventures. They are massive physical infrastructure buildouts, and while timelines or plans may shift, the projects themselves are not going away.

What makes them even more significant is that they are not being built for a single company, but for nations. This is infrastructure at the scale of power plants or telecom networks. Countries are betting on AI as a strategic asset, and NVIDIA is right at the heart of that transformation.

Final thoughts

The AI race is a long-term tailwind, and Nvidia is the clear winner in data center buildouts and GPU deployments.

AI will be larger than mobile and cloud combined. The astonishing numbers are starting to materialize, and I think it's clear this is just getting started. There will be ups and downs. No one knows how geopolitical risk will play out.

Disclosure: I have owned shares of Nvidia (NVDA) since December 29, 2017

If you like the article, please share your feedback in a comment on the article and follow me on X @anandkhatri.

The Valuation

Hey Multis, Kris here jumping in. Anand broke down the earnings like a pro.

But you probably also want to know whether Nvidia's stock is a buy right now. To judge that, we need to look at the valuation.